I have a lab that includes:

- Two standalone SQL Server 2014 instances

- One standalone SQL Server 2017 instance running on Linux

- Three 2016 instances that are part of an availability group

- A 2016 failover cluster instance with two nodes

- A domain controller

- A file server that also hosts an iSCSI target

- A WAN emulator to simulate network latency and congestion

Items two through six are hosted on a Hyper-V server, while everything else is on standalone hardware. Item seven is useful for testing network connections between geographically isolated data centers.

Until today I had trouble finding a way to realistically simulate network outages between availability group nodes, since they all reside on the same Hyper-V host. Finally, something in my dim little brain clicked. All I had to do was bind each of the nodes in my availability group to its own physical network interface.

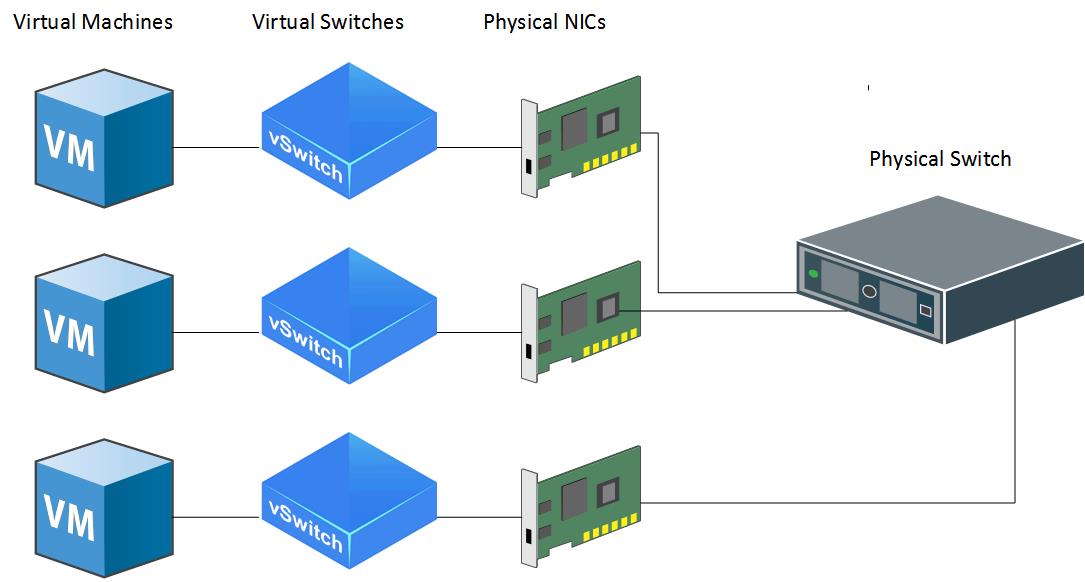

To accomplish this I found a couple of spare, dual Ethernet NICs, installed them the Hyper-V Host, and connected each port to the (physical) desktop switch. From there I booted the server, went into Virtual Switch Manager, and created three virtual switches, one for each port. After that I selected two guest OS instances for modification. On each instance I removed the existing network adapters and created a new network adapter tied to one of the new virtual switches. Here is a simplified drawing of the layout:

With this new configuration, creating a network outage is as simple as disconnecting a network cable. With two cables disconnected on a three-node cluster using node-majority quorum, I lost quorum and watched the availability group role shut down, as expected. Restoring network connectivity produced the expected behavior. Alternatively, I was able to use the failed quorum scenario to perform a forced quorum recovery.
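The quorum arithmetic behind that test can be sketched in a few lines of Python. This is only a rough model under simplifying assumptions: one vote per node, no witness, and no dynamic quorum adjustments. The node names are hypothetical placeholders, not the names used in my lab.

```python
def has_quorum(reachable_votes: int, total_votes: int) -> bool:
    """Node-majority rule: strictly more than half of all votes must be reachable."""
    return reachable_votes > total_votes // 2


def surviving_partition(nodes, unplugged):
    """Nodes whose cable is still plugged in can reach each other via the physical switch."""
    return [n for n in nodes if n not in unplugged]


# Hypothetical node names, one vote each (assumption: no witness configured).
nodes = ["AG-NODE1", "AG-NODE2", "AG-NODE3"]

for unplugged in ([], ["AG-NODE3"], ["AG-NODE2", "AG-NODE3"]):
    connected = surviving_partition(nodes, set(unplugged))
    state = "quorum held" if has_quorum(len(connected), len(nodes)) else "quorum lost"
    print(f"{len(unplugged)} cable(s) pulled -> {state}")
```

Pulling one cable leaves two of three votes, which is still a majority. Pulling two leaves a single vote on every side, so no partition can hold quorum and the cluster stops the availability group role.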